Automation Series Summary

In the second part of our series we will be focusing on Power BI and how to access the API through Powershell, as well as the benefits of using the API. We will also discuss a few of the limitations that exist within the API currently that could limit what you want to do with the API.

This is part 2 in the series, if you are looking for the first post you can find it here:

Automation Series 1, Part 1: Service Manager Dashboards in Power BI through Azure Automation

Why Use Power BI When it Comes to Automation

I’m not going to pull your leg and say that Power BI is the only online dash boarding solution out there. Nor is the in the most feature rich, or even the prettiest, but it does have a few key advantages.

First of all it’s free for modest use, and this includes all of the functionality that it does have. The only real limitations come from the amount of online storage your are provided with, the ability to consume live sources without uploading the data to Power BI, and some limitations on the load you can put on the API. The specifics of both of those can be found here:

and

Another great reason to use Power BI is because it is quite user friendly. It’s really pretty quick and painless to build out some pretty charts that are quite useful and then present them via a dashboard that can be access from the mobile app. Literally, within a few minutes you can have a dashboard that managers can view on their mobile phone with live data.

The final reason I chose to focus on Power BI for this series is that it is a Microsoft product, and because of that it receives a lot of press. There are a lot of consultants out there diving into this today and publishing some great information to the web, not to mention building Powershell modules for the platform (PowerBIPS).

The Automation Solution

Let’s take a look at the Power BI API and identify how we are going to be using it’s automation today. In briefly outlining the steps we are going to take, our process will look like this:

- Create / Find the Power BI datasource we want to manipulate

- Create / Update the Power BI table within that datasource

- Load the Incident data into the table

That doesn’t sound to bad now does it? For this particular scenario we are using a lab environment so the data set is relatively small, so rather than complicate the issue at this time by keeping track of what has been updated and what hasn’t each load, we are simply going to clear and refill the entire table each run of the script.

Lets look at our Powershell code now. For this particular script we are using the PowerBIPS Powershell module, which you can download from the link above.

# Import the PowerBIPS Powershell Module: https://github.com/DevScope/powerbi-powershell-modules

Import-Module ".\Modules\PowerBIPS" -Force

Import-Module smlets

#

# Helper Functions

#

#captures debug statements globally

function Debug {

param($text="")

Write-Output $text

}

#region PowerBI Settings

#region username and password

$user = "username"

$pass = "password" | ConvertTo-SecureString -AsPlainText -Force

#endregion

$cred = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList $user, $pass

$authToken = Get-PBIAuthToken -ClientId "9a49ceec-57d9-4dba-85c6-c74a7362c233" -Credential $cred

#endregion

#

# API CALLS

#

$class = get-scsmclass WorkItem.Incident$

$incidents = Get-SCSMObject -Class $class | select Id, Title, Classification, Status, CreatedDate, LastModified

#

# TRANSFORM

#

$allIncidents = @()

foreach($incident in $incidents){

$obj = @{}

$obj.Id = $incident.Id

$obj.Title = $incident.Title

$obj.Classification = $incident.Classification.DisplayName

$obj.Status = $incident.Status.DisplayName

$obj.Created = get-date($incident.CreatedDate) -Format "yyyy-MM-dd"

$obj.Modified = get-date($incident.LastModified) -Format "yyyy-MM-dd"

$allIncidents += New-Object –TypeName PSObject –Prop $obj

}

#

# POWERBI CONFIGURATION AND UPLOAD

#

# If cannot find the DataSet create a new one with this schema

$incidentTable = @{

name = "IncidentData";

columns = @(

@{

name = "Id";

dataType = "String"

}

,@{

name = "Title";

dataType = "String"

}

,@{

name = "Classification";

dataType = "String"

}

,@{

name = "Status";

dataType = "String"

}

,@{

name = "Created";

dataType = "Datetime"

}

,@{

name = "Modified";

dataType = "Datetime"

}

)

}

$datasetName = "SCSMDataSet"

$dataSetSchema = @{

name = $datasetName;

tables = @(

$incidentTable

)

}

# Test the existence of the dataset

Debug -text ("Working on {0}" -f "Checking DataSet") -action "write"

if (!(Test-PBIDataSet -authToken $authToken -name $datasetName) -and $allIncidents.Count -gt 0)

{

#create the dataset

Debug -text ("Working on {0}" -f "Creating DataSet") -action "write"

$pbiDataSet = New-PBIDataSet -authToken $authToken -dataSet $dataSetSchema #-verbose

Debug -text ("Writing {0} [{1}]" -f "DataSet Values", $allUtil.Count) -action "write"

$allIncidents | Out-PowerBI -AuthToken $authToken -dataSetName $datasetName -tableName $incidentTable.Name -verbose

}

else

{

$pbiDataSet = Get-PBIDataSet -authToken $authToken -name $datasetName

#clear the dataset and update the schema if necessary

Debug -text ("Working on {0}" -f "Clearing DataSet") -action "write"

Clear-PBITableRows -authToken $authToken -dataSetName $datasetName -tableName $incidentTable.Name -verbose

Debug -text ("Working on {0}" -f "Updating DataSet Schema") -action "write"

Update-PBITableSchema -authToken $authToken -dataSetId $pbiDataSet.id -table $incidentTable -verbose

Debug -text ("Writing {0} [{1}]" -f "DataSet Values", $allUtil.Count) -action "write"

$allIncidents | Out-PowerBI -AuthToken $authToken -dataSetName $datasetName -tableName $incidentTable.Name -verbose

}

#endsection

#section OUTPUT

$allIncidents | Export-Csv IncidentsTable.csv -NoTypeInformation

#endsection

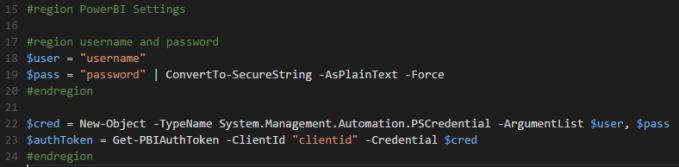

Looking at our code above, lets first look at how we are authenticating with Power BI.

For those of you that are familiar with using credentials in Powershell this is pretty straight forward. We are creating a credential object using a username and a password converted to a secure string. We then create a new credential object and pass that into the PowerBIPS ‘Get-PBIAuthToken’ cmdlet along with the ClientId property and we now have a valid authentication token.

Before we continue on, lets quickly walk through how you get the Client ID that is required for you to pull your authentication token. Most of the required steps are found here:

https://powerbi.microsoft.com/en-us/documentation/powerbi-developer-register-a-client-app/

But one step they have failed to mention that will effect most users is that you first need to make sure that you’ve logged into Power BI with your Azure user ID prior to attempting to add the client application and get your Client ID.

Next, lets look at the code required to create the DataSource and Table Schema:

As you can see in the example, the table is a hashtable with a name, and columns property. The name is a simple string, and the columns property is an array of columns which are hashtables with a name and datatype property. This is pretty straight forward, but be aware that there are only a limited set of data types available (Supported Data Types).

For the dataset itself, it’s more of the same with the dataset variable is a hashtable with a name and tables property. The name is a string and the tables property is an array of table hashes.

Finally, lets look at the code for creating or updating the dataset and the tables:

This is actually quite simple, but looks more complicated due to the debug entries throughout each step. If we were to remove those entries it would compress down to this:

Working through this, we are calling the ‘Test-PBIDataSet’ cmdlet in order to determine if they dataset already exists. If it does, then we are skipping over to the ELSE statement in order to update the table rather than create it. If it doesn’t exist yet, we are creating a new dataset using our table schema information, and then piping all of our data into the ‘Out-PowerBI’ cmdlet and targeting the new dataset and the correct table name we want to load the data into.

If we are updating the table instead, we get the dataset information and save it to a variable. In this instance we are then clearing the existing data from the table (to avoid duplicate data) and then while it’s empty we update the table schema in case we added new columns or changed names or data types. After that process completes we then pipe our data into the ‘Out-PowerBI’ cmdlet in order to load our data into the table.

That is really all there is to it. We now have a new data source in Power BI that contains our data tables. From here we can move into the Power BI web application, and using this new dataset we can create our reports. In the next entry in this series we will focus on how we can build a few simple graphs and create a dashboard.

If you are interested in more information around the Power BI API, that can also be found here: http://docs.powerbi.apiary.io/#

Next post: Automation Series 1, Part 3: Power BI Reporting

Ready to transform your SCSM experience further with automation? View all of the exciting apps Cireson has to offer.